In a new paper published in BMJ’s Journal of Medical Ethics, Jonathan Ives, John Downer and Helen Smith of the TAS Functionality Node explore the increasing use of AI in healthcare and medical settings, and the lack of professional guidance around it.

Although AI has great potential to help improve medical care and ease the burden on healthcare workers, the authors argue that, because there is no precedent for what happens when AI, or a medical worker influenced by AI, makes a mistake, guidance setting out the rights and expectations of those who work closely with it should be developed as a priority.

The NHS AI Lab and Health Education England have recently published reports that focus on healthcare workers’ understanding of, and confidence in, AI clinical decision support systems, and that are concerned with developing trust in, and the trustworthiness of, these systems. However, while these reports offer guidance to the developers and purchasers of such systems, they offer little specific guidance to the clinicians who will be required to use them in patient care.

The clinicians who must decide whether or not to act on an AI’s recommendations are subject to the requirements of their professional regulatory bodies in a way that AIs (and AI developers) are not. This means that clinicians carry responsibility not only for their own actions, but also for the effects of the AI they use to inform their practice.

The paper argues that clinical, professional and reputational safety will be put at risk if this lack of professional guidance for clinicians is not addressed, and that such guidance should be introduced urgently alongside the existing training for clinical users.

The authors end with a call to action for clinical regulators: to unite in drafting guidance for users of AI in clinical decision-making that helps them manage clinical, professional and reputational risks.

More information

Read the full paper:

http://dx.doi.org/10.1136/jme-2022-108831

Read a blog about the paper on BMJ

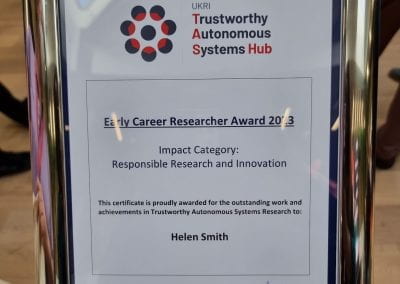

All authors are fully or partly funded through UKRI’s Trustworthy Autonomous Systems Node in Functionality under grant number EP/V026518/1.

Helen Smith is additionally supported by the Elizabeth Blackwell Institute, University of Bristol via the Wellcome Trust Institutional Strategic Support Fund.

Jonathan Ives is in part supported by the NIHR Biomedical Research Centre at University Hospitals Bristol and Weston NHS Foundation Trust and the University of Bristol. The views expressed in this publication are those of the authors and not necessarily those of the NHS, the National Institute for Health Research or the Department of Health and Social Care.