Thank you and farewell from the TAS Functionality Node

As the Trustworthy Autonomous Systems Functionality Node project comes to an end, we want to express our sincere gratitude to everyone who supported and engaged with our research over the past three years. This interdisciplinary project brought together experts across robotics, computer science, ethics, law, and social science to investigate the challenges of developing trustworthy autonomous systems with evolving functionality.

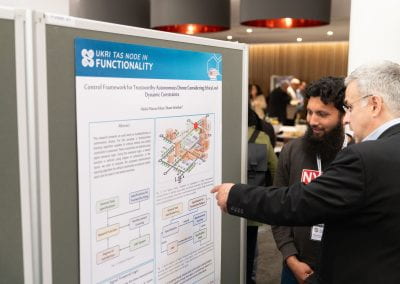

One of our key findings is that giving autonomous systems the ability to adapt and change their functionality over time raises significant considerations for how these systems need to be specified, designed, verified, and regulated to ensure they remain safe, reliable, and ethical. We developed novel approaches in areas such as formal verification of swarm robotics, design guidelines for soft robots with evolving properties, and regulatory frameworks for autonomous systems that can learn and change during operation. For a broader list of outputs, please have a look at our post here or explore our website.

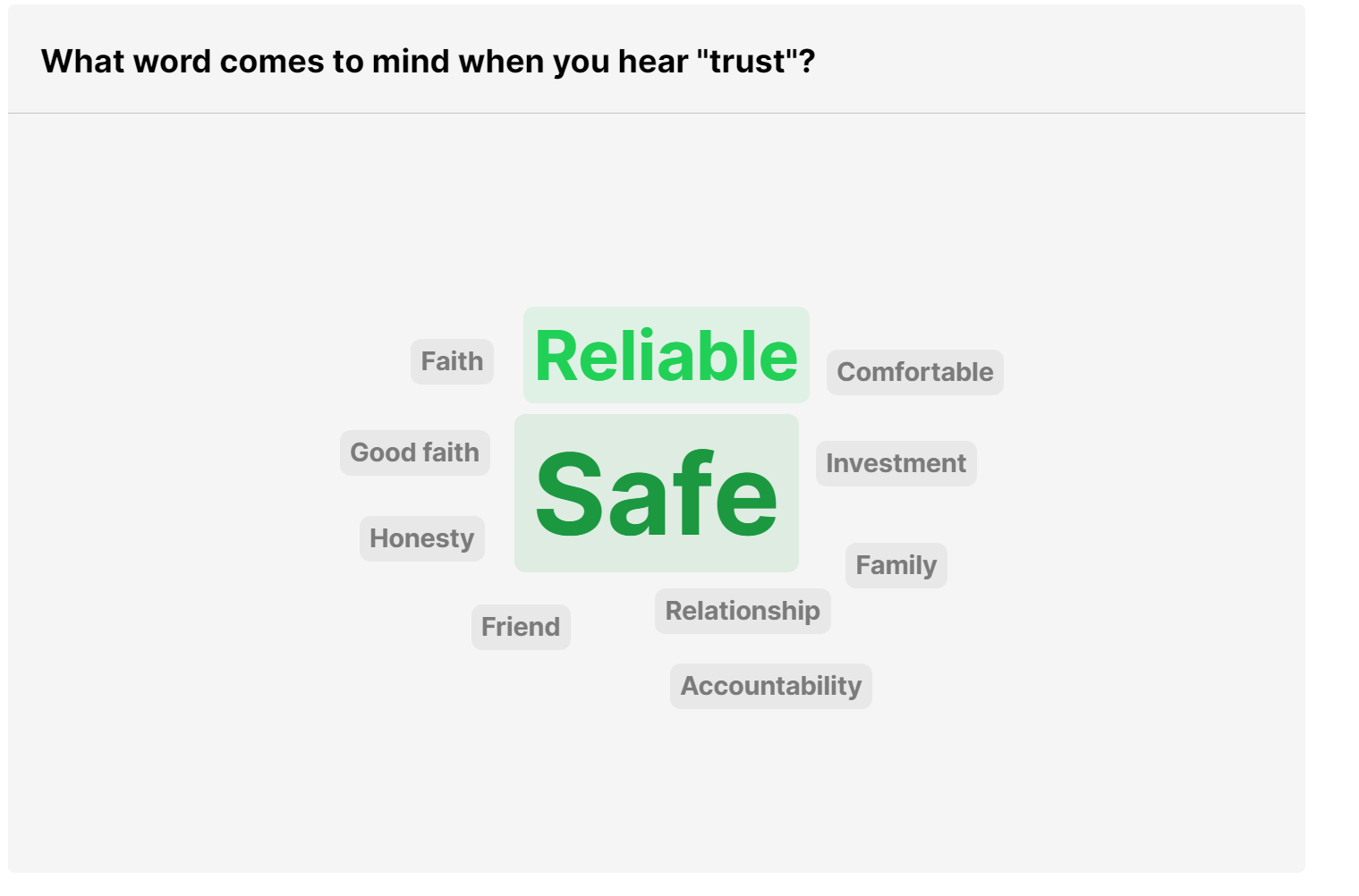

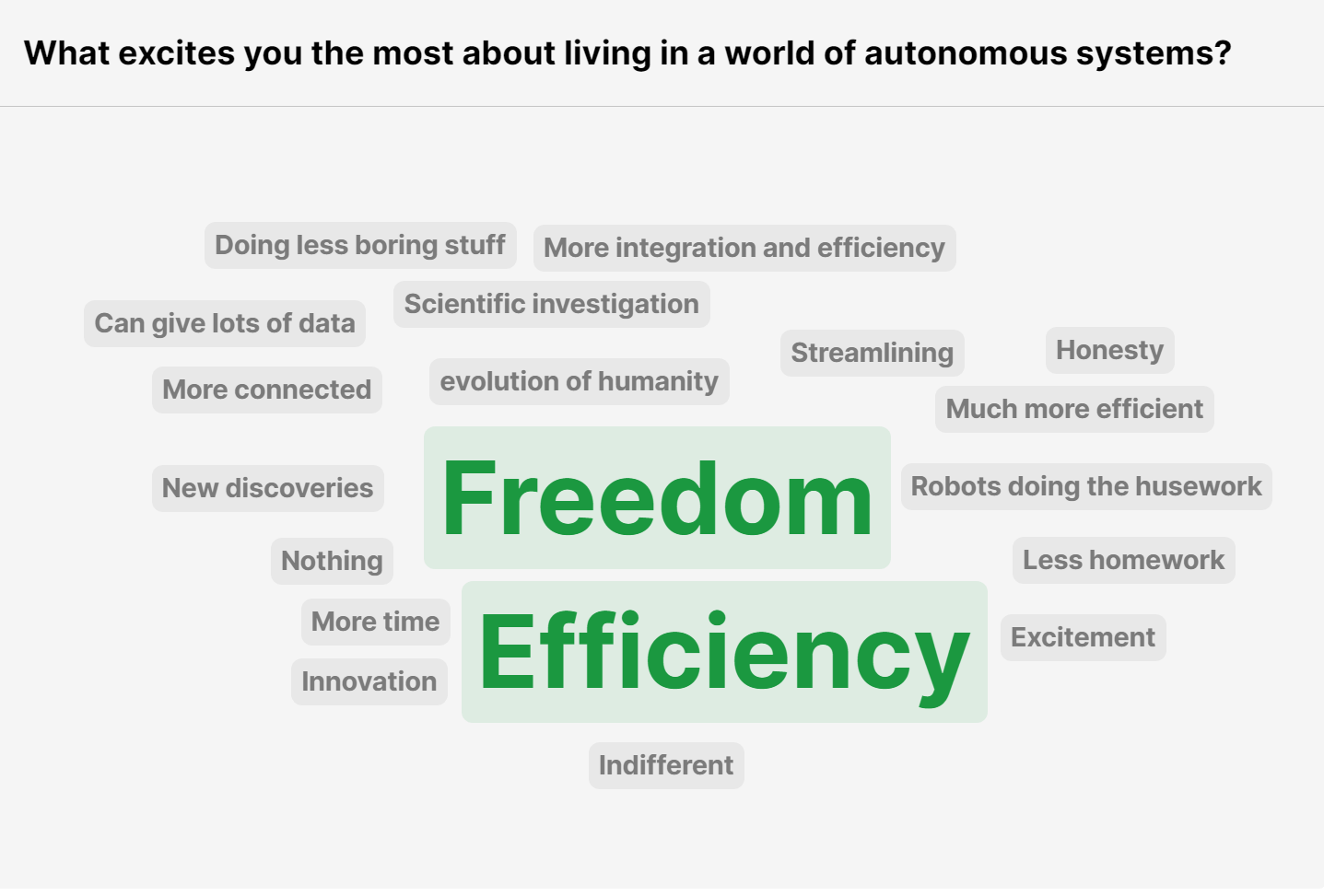

Throughout the project, we strove to explore not just the technical aspects but also the broader societal contexts and implications of autonomous systems with evolving functionality. Our work highlighted the importance of public understanding, transparency, and accountability as these technologies become more prevalent. We also critically examined ethical issues around trust, consent, and moral responsibility.

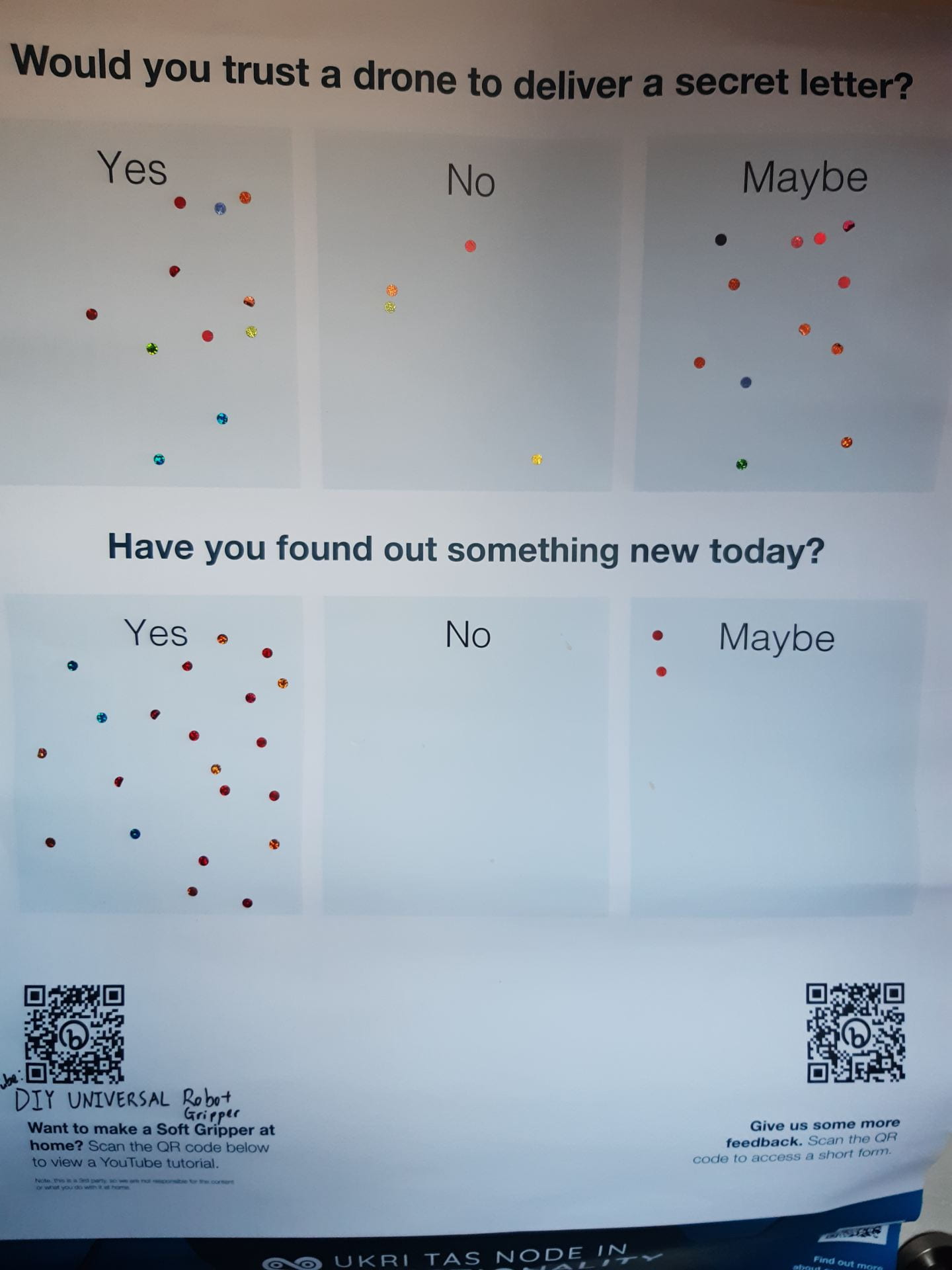

Engaging diverse stakeholders was vital to our research. We held numerous public engagement events, including interactive demonstrations, hands-on workshops, podcasts, and panel discussions. Connecting with members of the public, industry partners, policymakers, and other scholars enriched our perspectives and shaped the responsible development of trustworthy autonomous systems.

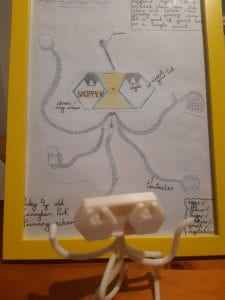

Recently we talked to members of the public about our research at the Festival of Tomorrow. As part of that, we ran a Design a Robot competition for students at the Deanery Academy and Covingham Park primary. Check out our blog here to find details of the entries and what the students had to do. The winner at primary level was the shopper robot from Ruby; one of her prizes was a 3D model of her robot.

As we wrap up this chapter, we are immensely grateful to our partners, the broader TAS Hub community, and everyone who participated in and supported this important work. The future of evolving autonomous systems remains full of possibilities and challenges that will require ongoing multidisciplinary collaboration. We hope the foundations laid by this project will pave the way for continued innovation and dialogue in this dynamic field.

Please check out our video below, and follow us on Twitter to see the longer version, coming soon.